Change is inevitable. Whether we embrace this fact or ignore it, the arrow of time does not care — it simply moves forward.

One of the fascinating examples of the passage of time is the recent evolution of Google's core algorithm.

It paints a captivating picture of what is achievable through the sheer brilliance of the human mind and creative engineering.

When Google released the news about their brand-new technology — MUM — back in May 2021, it instantly became a talking point of numerous discussions.

However, this technological breakthrough is not only an exciting topic to talk about with fellow SEO enthusiasts, but also the result of countless improvements introduced by Google throughout the years.

Two of these notable improvements — which later became the foundation MUM is built upon — are RankBrain and BERT.

This article briefly explains what these algorithms are and how they work. Moreover, it compares RankBrain, BERT, and MUM, and presents their impact on Google's core algorithm.

Join us as we embark on the journey into the world of natural language processing (NLP) and demonstrate why the latest Google algorithm update might be the most impactful one to date.

Key Takeaways

- Google's core algorithm has undergone multiple transformations throughout the years, with the most recent being the introduction of natural language processing.

- Google algorithms, simply put, are robust systems consisting of sequences upon sequences of instructions designed to provide internet users with relevant answers to their questions.

- Two of the most notable algorithms that have become the foundation for Google's latest update are RankBrain and BERT.

- RankBrain builds upon the earlier Hummingbird update, which focused on improving natural language search. It changes Google's core algorithm to show pages that match the topic of the search query, even if the search engine does not recognize some of the words or phrases in it.

- BERT, which was introduced in 2018, applies bidirectional training of the Transformer architecture to language modeling. It helps the machine "read" text more like a human, instead of going word by word from left to right or vice versa.

- The most recent algorithm update, MUM, is 1,000 times more powerful than BERT, according to Google.

What Are Google Algorithms?

Below is a very brief explanation of Google's inner workings. If you'd like to dig deeper, please see our full article, Google 101: How Search Works.

First, we need to understand what Google algorithms are and why they matter. Otherwise, we will be left in the dark regarding more complex issues.

Google algorithms, simply put, are robust systems consisting of sequences upon sequences of instructions designed to provide internet users with relevant answers to their questions.

They constitute a vital part of the machinery that interprets search queries and offers the results people want to see.

Besides a spider's web of diverse ranking factors, they are the paramount element that made Google's search engine what it is today — a highly effective tool that processes over 8.5 billion searches every day.

With time, the algorithms used by Google have undergone multiple transformations. One of the most significant stepping stones in the evolution of Google's algorithms was the implementation of natural language processing.

Machine learning-based NLP algorithms allowed Google Search to grasp the contextual nuances of the language better than ever before, delivering outstanding results.

What Is Natural Language Processing?

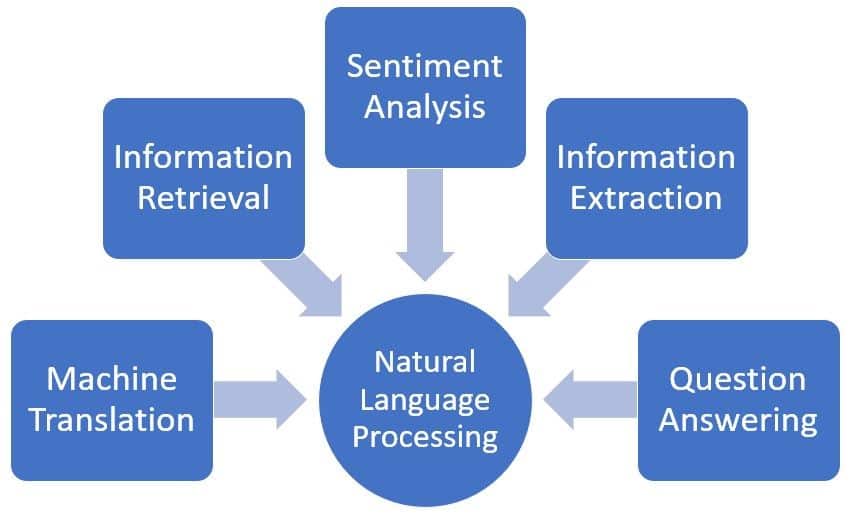

Natural language processing is a unique field of interest revolving around computer-human language interactions.

It combines linguistics, computer science, and artificial intelligence. Its primary goal is to create a computer capable of "understanding" all the intricacies of human language.

But why would we want to create such a technology? Well, we can use NLP in several ways:

- Automatic summarization: Extracting the critical data from a given input (e.g., text, image, video).

- Translation — Transferring the meaning from one human language to another.

- Speech recognition — Converting spoken language into text.

- Optical character recognition — Recognizing printed text from an image representing said text.

- Sentiment analysis — Analyzing information to identify and study affective states (emotions).

- Text-to-speech — Converting written text into human speech.

- Topic segmentation — Dividing given text into particular groups, such as sentences, words, and topics.

- Relationship extraction — Identifying relationships between various artifacts in a given text.

- Natural language generation (NLG) — Converting information from computer databases into human language.

- Natural language understanding (NLU) — "Understanding" human language, improving reading comprehension of the machine.

- Question answering — Providing relevant answers to human language questions, including open-ended ones (such as "Should we fear death?" or "What is the meaning of life?").
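To make one item from the list above more concrete, here is a minimal sketch of the simplest form of segmentation: splitting text into sentences and words. It relies only on regular expressions, and the function names `split_sentences` and `split_words` are our own illustrative choices. Production NLP systems use far more robust, learned tokenizers, so treat this as a toy under those assumptions.

```python
import re

def split_sentences(text: str) -> list[str]:
    """Naively split text after '.', '!' or '?' when followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]

def split_words(sentence: str) -> list[str]:
    """Lowercase a sentence and pull out simple alphabetic word tokens."""
    return re.findall(r"[a-z']+", sentence.lower())

text = "Google reads queries. It splits them into words! Does it understand them?"
sentences = split_sentences(text)
tokens = [split_words(sentence) for sentence in sentences]

for sentence, words in zip(sentences, tokens):
    print(sentence, "->", words)
```

A rule like this breaks on abbreviations such as "e.g." or "Dr.", which is one reason real systems learn segmentation from data instead of hard-coding it.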

Why Should We Care?

Natural language processing can be considered a symbolic "bridge" between the vast Google Search index and user input. Without it, Google Search would not exist — at least, not in the same form as we know it.

Components like topic segmentation and natural language understanding allow the system to correctly interpret the meaning of words we type into the search box.

The former helps the machine understand the hierarchical structure of language, while the latter is necessary to grasp the concepts hidden behind words and sentences.

Combined, all elements of the NLP system create a technology able to overcome the ambiguity of language. The machine recognizes words you "feed into it" and figures out how they are linked together to create meaning.

RankBrain vs. BERT vs. MUM

With the basics out of the way, we can now move on to the analysis of RankBrain, BERT, and MUM. Although similar in nature, these algorithms differ vastly from each other.

| Name | Year announced | Impact on Search | Predecessor | Background |
| --- | --- | --- | --- | --- |
| RankBrain | 2015 | Promoting the use of natural language while punishing sites that are artificially stuffed with keywords. | Hummingbird | Handling search queries the algorithm has never seen before. |
| BERT | 2018 | Further encouraging the use of natural language, allowing for more contextual nuance and ambiguity. | RankBrain | Improving the algorithm's understanding of language context and flow. |
| MUM | 2021 (not rolled-out yet) | Supporting the use of more than one type of content (e.g., photos, videos, text) and creating it in languages other than English. | BERT | Limiting the number of searches needed to get the information the user wants. |

RankBrain

The introduction of RankBrain was a revolution in how search results are determined.

Rolled out in 2015, this AI-powered algorithm instantly made its impact felt across the internet, affecting about 15 percent of all searches.

Today, it is one of Google's most important ranking signals and affects all results.

RankBrain builds upon the earlier Hummingbird update, which was focused on improving natural language search. It changes Google's core algorithm to show pages that match the topic presented in the search query — even if the search engine cannot recognize phrases or words within it.

How does it achieve this goal?

In layman's terms, it transforms the language into easy-to-work-with vectors it later uses to determine the meaning. The answer it provides is based on two factors — contextual information and similar language.

In other words, instead of focusing on keywords themselves, the system looks at the query more holistically and uses its previously acquired knowledge to deliver the output.
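The idea of turning language into vectors can be sketched in a few lines. The example below is a toy, not RankBrain itself: the three-dimensional `WORD_VECTORS` are invented for illustration (real systems learn vectors with hundreds of dimensions from large corpora), and averaging word vectors followed by cosine similarity is just one simple, assumed way to compare an unfamiliar query with familiar ones.

```python
import math

# Hypothetical word vectors, made up for this illustration only.
WORD_VECTORS = {
    "predator": [0.9, 0.1, 0.0],
    "apex":     [0.8, 0.2, 0.1],
    "consumer": [0.6, 0.3, 0.4],
    "food":     [0.5, 0.1, 0.6],
    "chain":    [0.4, 0.2, 0.5],
    "shopper":  [0.0, 0.9, 0.3],
    "store":    [0.1, 0.8, 0.4],
}

def query_vector(query):
    """Average the vectors of known words; unknown words are skipped."""
    known = [WORD_VECTORS[w] for w in query.lower().split() if w in WORD_VECTORS]
    if not known:
        return None
    return [sum(dim) / len(known) for dim in zip(*known)]

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def most_similar(query, candidates):
    """Return the candidate query whose vector is closest to the new query."""
    q = query_vector(query)
    return max(candidates, key=lambda c: cosine_similarity(q, query_vector(c)))

new_query = "consumer highest food chain"  # "highest" has no vector here
known_queries = ["apex predator", "store shopper"]
best = most_similar(new_query, known_queries)
print(best)
```

Even though the new query shares no words with "apex predator", its vector points in a similar direction, so the sketch treats the two as related. That is the intuition behind matching a query by topic instead of by exact keywords.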

Consequently, the algorithm rewards more human-like search interactions and conversations, promoting the use of long-tail keywords.

Nevertheless, BERT goes well beyond what RankBrain helped to build, taking an even more sophisticated approach to search queries.

BERT

While some pre-RankBrain bloggers artificially stuffed their articles with keywords to show up higher in search engine results pages (SERPs), the post-RankBrain era was relatively free of this issue. Still, several problems remained for Google to solve.

One concern that demanded attention was the fact that Google's algorithm had difficulties with language context and flow. The efforts Google's team undertook to address this problem culminated in creating BERT — Bidirectional Encoder Representations from Transformers.

BERT applies bidirectional training of the Transformer architecture to language modeling. It helps the machine "read" text more like a human. Instead of going word by word from left to right or vice versa, it absorbs each word in a bidirectional context.

It means that Google's algorithm no longer deciphers the meaning of a word without paying attention to the words surrounding it. On the contrary, it reads the entire sequence of words at once.

It allows the machine to learn what a word means based on its surroundings.
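Why seeing both sides of a word matters can be shown with a deliberately simple sketch. This is not BERT: instead of a neural network, it counts overlaps with hand-written clue words, and the `SENSE_CLUES` table and the sentence are assumptions made up for the example. The only point is the difference between a left-only context and a bidirectional one.

```python
def left_context(tokens, i):
    """Words a strict left-to-right reader has seen before position i."""
    return tokens[:i]

def bidirectional_context(tokens, i):
    """All words around position i, on both sides."""
    return tokens[:i] + tokens[i + 1:]

# Hypothetical clue words for two senses of "bank", invented for illustration.
SENSE_CLUES = {
    "financial institution": {"money", "deposit", "loan", "account"},
    "river side": {"river", "water", "fishing", "shore"},
}

def guess_sense(context):
    """Pick the sense whose clue words overlap most with the context."""
    scores = {sense: len(clues & set(context)) for sense, clues in SENSE_CLUES.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"

tokens = "he sat on the bank of the river".split()
i = tokens.index("bank")

left_only = guess_sense(left_context(tokens, i))
both_sides = guess_sense(bidirectional_context(tokens, i))
print(left_only, "|", both_sides)
```

With only the words to the left, nothing disambiguates "bank". Once the words to the right are visible as well, "river" settles the question.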

As a result of this change, Google can provide you with relevant search results even if you make a grammatical error in your query or use terms with more than one meaning.

Since its publication in 2018, BERT has opened the way for more precise search results and laid the final building block for an even more advanced search algorithm: MUM.

MUM

Even though the improvements BERT brings to the table are impressive, the ones offered by MUM could be instrumental in shaping the new age of creating and sharing information over the internet.

Implementing this algorithm is undoubtedly the next big step for Google in further improving its most popular service.

According to Google themselves, the Multitask Unified Model (or MUM) is 1,000 times more powerful than BERT. Additionally, thanks to the T5 text-to-text framework, MUM can understand and generate human language better than any algorithm before it.

The main driver behind this technology was the frequently encountered need to make multiple searches to find the required information. With MUM, this bothersome situation might soon become yesterday's news.

The MUM algorithm can recognize that the user wants to receive information not explicitly stated in the question. It uses the context of the query to determine which results are suitable and which are not.

This level of knowledge and sophistication allows it to understand that someone asking for a comparison between the two cities might want to learn more about available accommodation options, local attractions, the best places to eat, etc.

It opens up numerous possibilities and can make the whole experience much more comfortable for the user.

Furthermore, MUM can transfer knowledge between languages and is multimodal. It allows for a never-seen-before level of communication.

The user can receive information that was originally published in a language other than the one used in the query, and that is pieced together from different formats, such as web pages, images, and videos.

Overall, it is hard not to be impressed by MUM's benefits.

Applying this algorithm will not only be vital from the SEO perspective but also completely change the way users interact with Google Search.

Examples of The Algorithms at Work

To illustrate the advantages of the algorithm changes mentioned above, let's take a look at some examples.

This way, we can compare them and clearly see how big of an impact they made on Google's search engine.

RankBrain

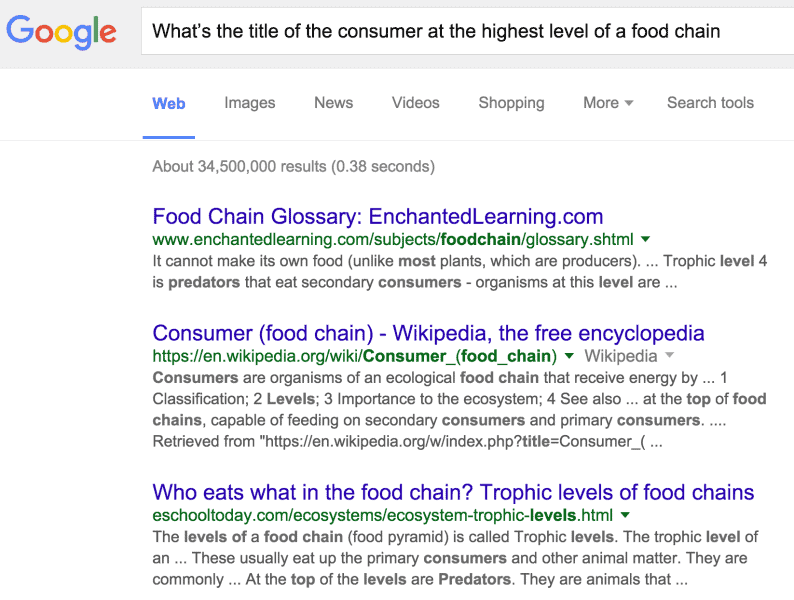

The first example we will look at demonstrates RankBrain's ability to understand search queries written in a natural language.

It shows that the algorithm can correctly respond to human-like questions, which are often convoluted and unnecessarily complex.

The query "What's the title of the consumer at the highest level of a food chain" is a long sentence written in plain language.

However, RankBrain is able to work around all the fluff surrounding the core message and present a list of (at least partially) relevant pages.

Source: https://searchengineland.com/faq-all-about-the-new-google-rankbrain-algorithm-234440

It is important to note that RankBrain understands that the word "consumer" has more than one meaning and can select the suitable one, depending on the context.

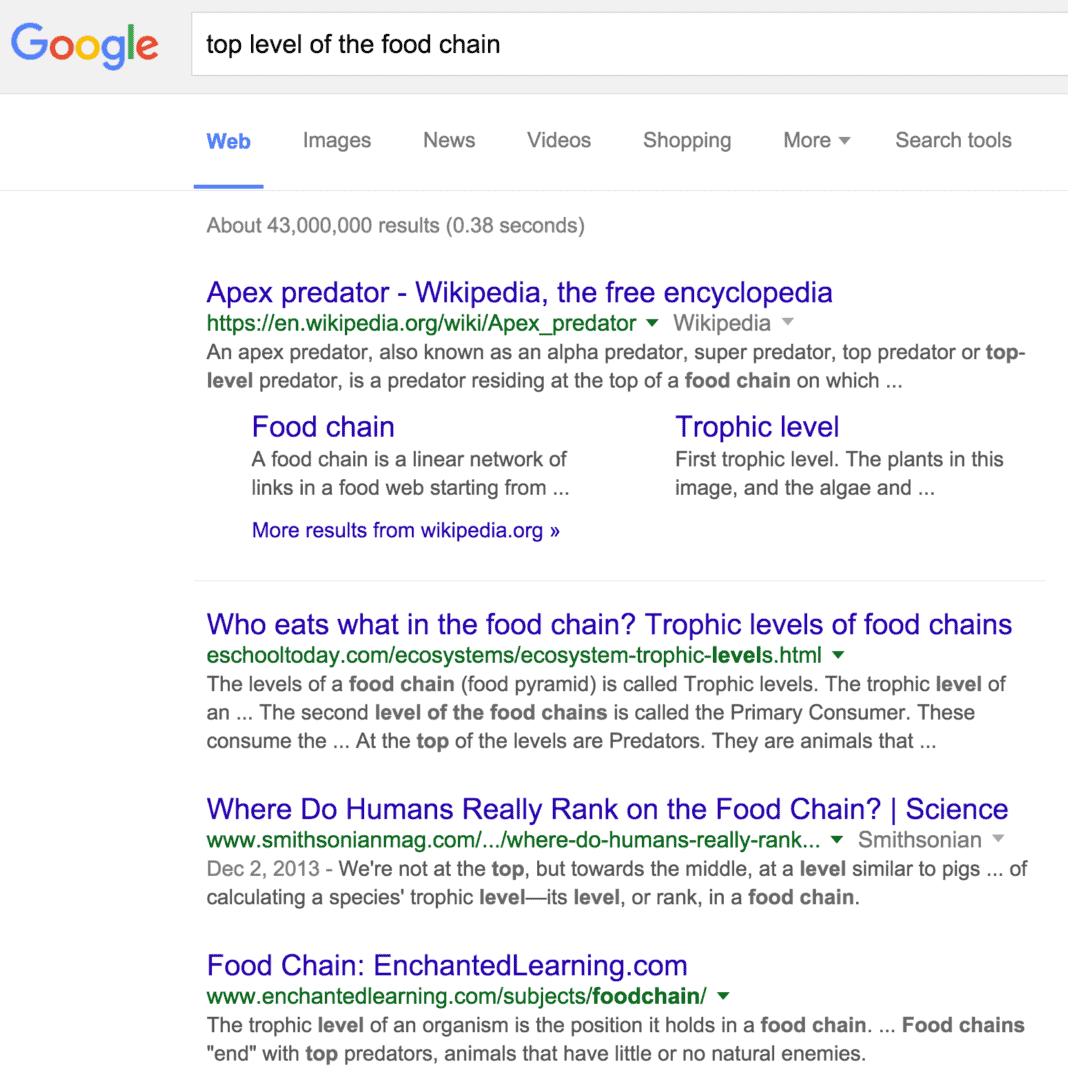

In this case, it correctly chooses the definition of a consumer as "a person or thing that eats or uses something." Still, the same type of query written in a more contained and concise manner — as expected — yields better results.

Source: https://searchengineland.com/faq-all-about-the-new-google-rankbrain-algorithm-234440

It is fascinating that RankBrain connects the first question with the second one (which it is more familiar with) and uses that knowledge to provide relevant search results.

It is the ideal presentation of RankBrain's potential to handle the question it has never seen before.

Nonetheless, it is not the definitive demonstration of RankBrain's abilities.

As BERT's predecessor, RankBrain can also deduce what the user wants to see, even if they did not mention it explicitly in their query.

Source: Google.com (search query “the grey console developed by Sony”)

The example presented above is proof of RankBrain's in-depth understanding of human language, with its underlying structure of connections between words and their descriptions.

It knows what the question implies based not on the keywords presented within the search query, but on the overall context of the question.

So, RankBrain uses its vast database of keywords frequently paired with "grey" and "console" to offer satisfying results. It can be described as RankBrain's "knowledge about consoles."

BERT

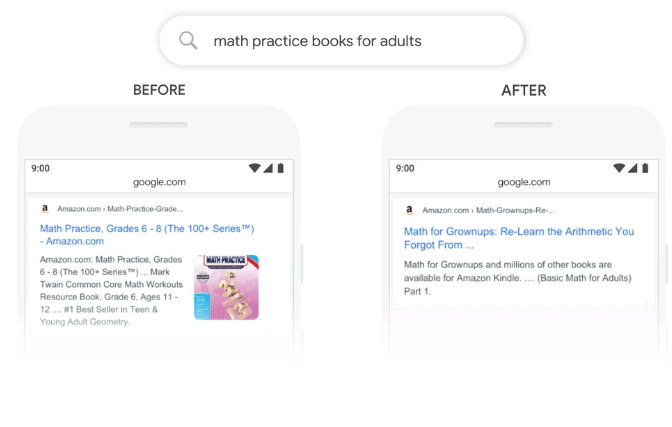

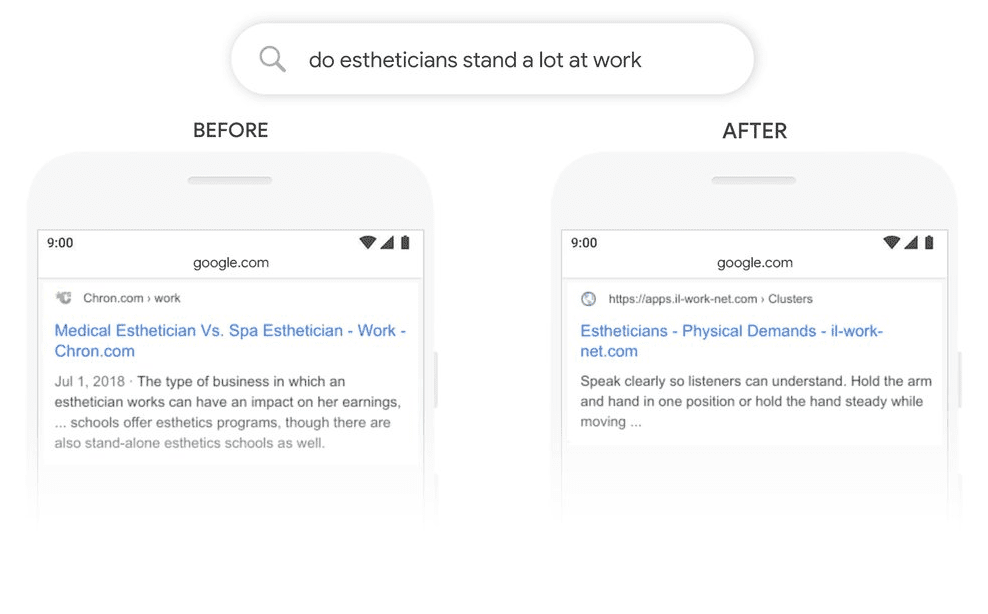

The examples of BERT in action are similarly spectacular.

The bidirectional methodology it uses allows Google Search to do things previously unthinkable. For example, let's look at how BERT improves search results for the query "math practice books for adults."

Source: https://blog.google/products/search/search-language-understanding-bert/

As you can see, BERT pays attention to the whole sentence, while the pre-BERT algorithm disregards the "for adults" part of the search query.

Thanks to this change, the user can find what they need at the top of the results page instead of scrolling down or going to the following search results page.

BERT's improved understanding of the human language also allows it to be more precise when picking the suitable meaning of keywords.

It is well presented in the example below, where the BERT model understands the word "stand" is related to the concept of the physical demands of a job and not the "stand-alone" nature of the business.

As a result, BERT offers a more practical, relevant response for the user.

Source: https://blog.google/products/search/search-language-understanding-bert/

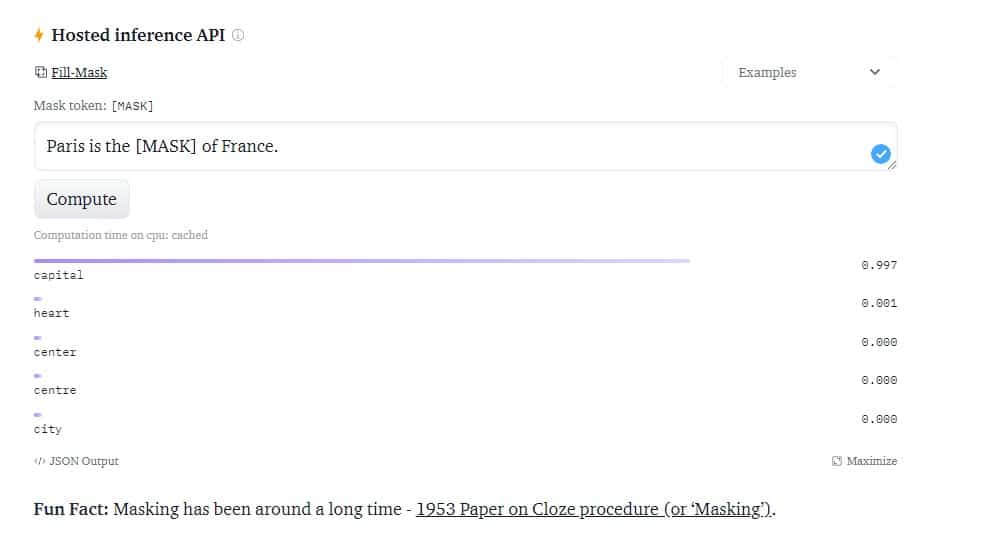

Another example of BERT's superpowers is how good it is at "guessing" what words would be most suitable for a given sentence.

BERT's masking predictions rely upon the context of the whole sentence, where other words in it serve as clues to get the right answer.

The example given above shows the unbelievable accuracy of this model.

Besides using the whole sentence to understand each word that it features, BERT also utilizes each singular word to understand the sentence's meaning.
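The masked-word "guessing" described above can be imitated with a much cruder technique than BERT uses. The sketch below swaps in plain counting for the neural model: it looks at a tiny, invented `CORPUS` and asks which words have appeared between the same left and right neighbors as the `[MASK]` token. The corpus, the `[MASK]` convention as used here, and the function name `predict_masked` are all assumptions for illustration.

```python
from collections import Counter

# Tiny invented corpus; a real model is trained on billions of words.
CORPUS = [
    "the cat drinks milk every morning",
    "the cat drinks water every morning",
    "the cat drinks milk every evening",
    "the dog chases cats every morning",
]

def predict_masked(sentence, corpus):
    """Rank words seen between the same left and right neighbors as [MASK]."""
    tokens = sentence.split()
    i = tokens.index("[MASK]")
    left = tokens[i - 1] if i > 0 else None
    right = tokens[i + 1] if i + 1 < len(tokens) else None

    counts = Counter()
    for line in corpus:
        words = line.split()
        for j, word in enumerate(words):
            word_left = words[j - 1] if j > 0 else None
            word_right = words[j + 1] if j + 1 < len(words) else None
            # Both neighbors must match: clues come from the left AND the right.
            if word_left == left and word_right == right:
                counts[word] += 1
    return counts.most_common()

predictions = predict_masked("the cat drinks [MASK] every day", CORPUS)
print(predictions)
```

Here the left neighbor "drinks" and the right neighbor "every" together narrow the candidates to "milk" and "water". BERT does something far richer, weighing every word in the sentence, but the principle of using surrounding words as clues is the same.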

MUM

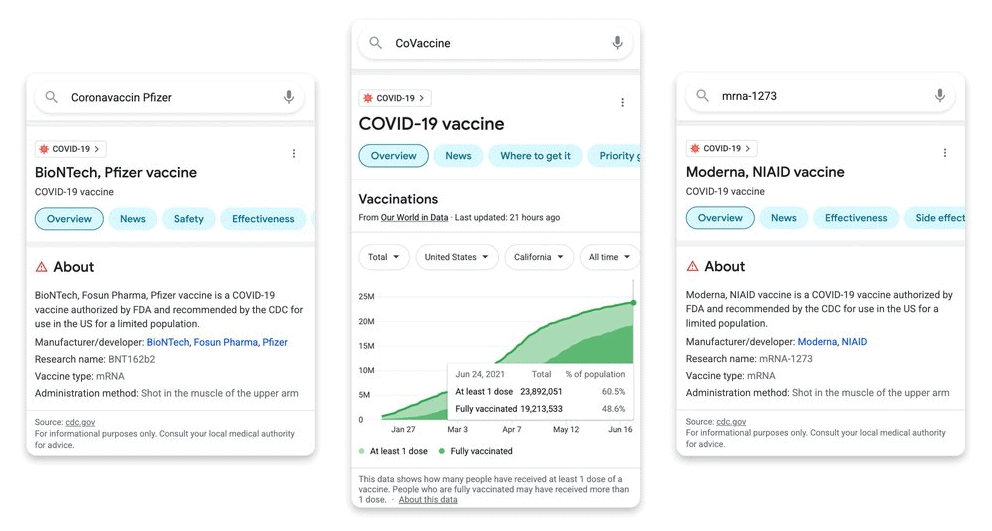

When it comes to showing MUM's capabilities, we have much less wiggle room. Because this technology is yet to be integrated into Google's core algorithm, there is much less information about its potential uses.

Google has already hinted at a few ways MUM can shape the future of search algorithms, and even tested the model to improve vaccine search results. Let's focus on the latter, as it is a real-life example of how MUM is used in practice.

Stormed with questions about coronavirus and multiple new vaccine names, Google's search engine was overwhelmed with queries it had never seen before.

To help it correctly identify phrases like "Coronavaccin Pfizer" and "CoVaccine," Google decided to lean on their new tool for help — and it has been a screaming success.

Source: https://blog.google/products/search/how-mum-improved-google-searches-vaccine-information/

In the end, MUM identified over 800 variations of vaccine names in more than 50 languages.

What is even more impressive is that it did it within just a few seconds, allowing Google to deliver the latest trustworthy information about the vaccine almost immediately.

Thanks to MUM's knowledge transfer skills, the amount of work that would usually take weeks was done in a shorter time frame than it takes you to brew your morning coffee.

It shows how much MUM-powered features and improvements can offer once they become an integral part of Google's core algorithm.

The Bottom Line

Whenever we get comfortable with the current state of affairs, Google surprises us with something new.

The recent milestone in understanding and communicating information — MUM — only proves that the biggest company involved in search engine development is not slowing down.

RankBrain, BERT, and MUM are prime examples of what Google can do to make the world's information more accessible.

Soon enough, they might be the harbinger of doom for other search engines if they fail to catch up or provide better features of their own.

Whether this vision comes true or not, further growth of Google's core algorithm will undoubtedly be pleasant to watch.

Until then, we are left wondering if, one day, search engines will be sophisticated enough to understand data as the human user does.