Google is not slowing down. Its specialists are always striving to make it better – faster, safer, more helpful and relevant. Any company that cares about its online presence needs to stay up to date with the latest news and releases from the technological world, especially when it comes to search engines.

However, it’s not only about the rankings, as Google contributes significantly to other fields, such as artificial intelligence or cloud computing, open-sourcing many of its solutions so that you can use them to your own ends.

In 2018, Google published the BERT algorithm to help itself better understand users’ intent and search queries, ultimately leading to more accurate search results.

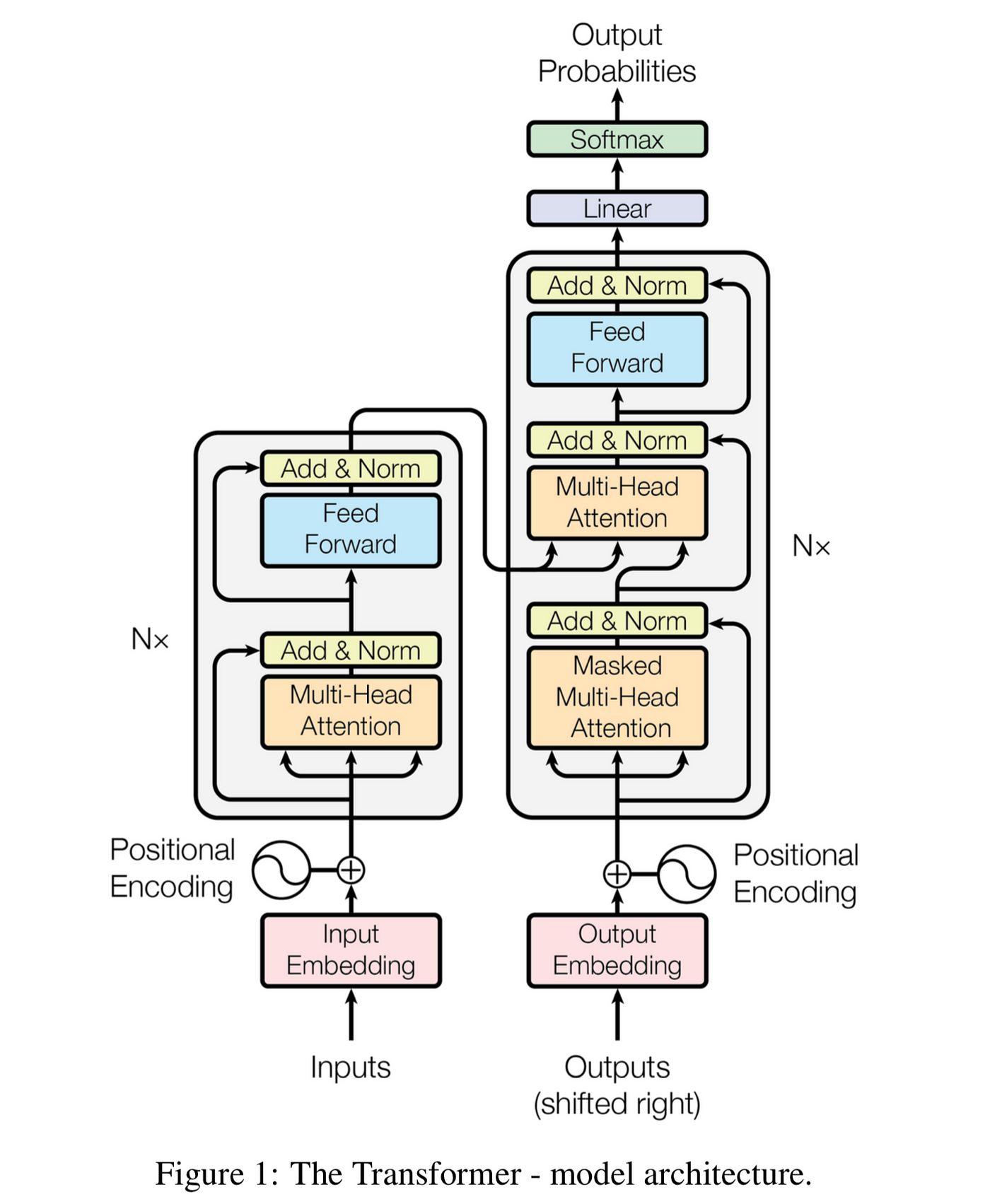

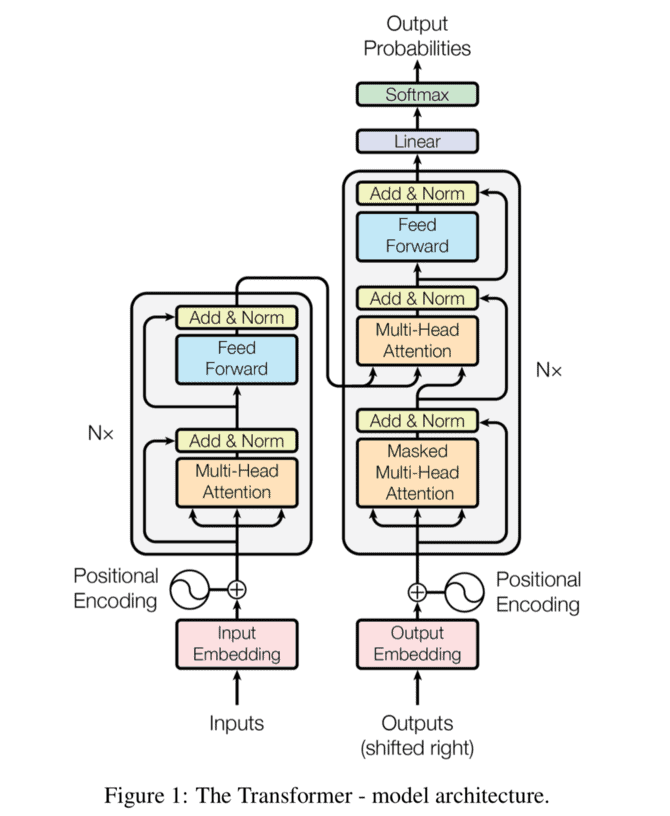

BERT’s main purpose is to train a transformer (an attention-based model) bidirectionally and apply it to language modeling, so that the algorithm reads words, phrases and sentences as a whole and takes both their meaning and their context into account.

This was yet another step toward teaching machines how to understand human language.

Key Takeaways

- Google is constantly striving to make improvements to their search engine

- In 2018, they published the BERT algorithm to help them better understand users' queries and deliver more accurate search results

- BERT is a machine learning model that is trained for language modeling and next sentence prediction

- The algorithm makes search more human-like and natural, as it understands the context of the user’s query and provides relevant results. It also helps Google better understand user intent

- Some of the benefits of BERT include improved featured snippets and more accurate voice search results.

- The algorithm is available in a wide variety of languages.

- BERT was open-sourced by Google, meaning you can use it to your advantage and train your own state-of-the-art language processing system

What Is BERT?

Bidirectional Encoder Representations from Transformers (BERT) is a neural network-based machine learning model, or, to be precise, a framework for natural language processing (NLP). Google developed and published it in 2018, and a year later announced that BERT was being used in its search engine. BERT is also the name of the accompanying academic paper and research project.

BERT is a transformer language model trained for language modeling and next sentence prediction. Its performance on natural language understanding is state-of-the-art, yet not fully understood.

Source: https://medium.com/inside-machine-learning/what-is-a-transformer-d07dd1fbec04

It was developed to allow a better understanding of the context of words, and thus of users’ queries, enabling the delivery of more relevant search results. Though it’s not perfect, as even Google admits, it’s an important step forward.

According to Pandu Nayak, Google Fellow and Vice President of Search, ‘language understanding remains an ongoing challenge, and it keeps us motivated to continue to improve Search. We’re always getting better and working to find the meaning in – and most helpful information for – every query you send our way.’

A great advantage of BERT is that it works in a variety of languages.

What’s more, it’s capable of learning from one language and applying that knowledge to another, helping Google provide users from all around the world with relevant search results.

NLP and Neural Network

Natural language processing (NLP) is an artificial intelligence field that combines AI methods and capabilities with computer science and linguistics. It analyzes and synthesizes human communication to enable computers to extend their knowledge of languages and the way people put words together into context.

A neural network is a machine learning model inspired by the human brain. It is a system of interconnected “neurons” that process information by passing signals along weighted connections.

A neural network is made up of layers, and each layer is composed of individual neurons that have their own weights and biases. The overall function of a neural network is to take in data (such as text or images) and output a result (such as the classification of an image).
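To make the idea of layers, weights and biases more tangible, here is a minimal sketch of a two-layer network in plain Python. The function name `dense_layer`, the sigmoid activation and all the numbers are assumptions made purely for illustration; real networks such as BERT are vastly larger and use different building blocks.

```python
import math

def dense_layer(inputs, weights, biases):
    """One fully connected layer: every neuron computes a weighted sum
    of the inputs plus its own bias, then applies a sigmoid."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-z)))
    return outputs

# Hypothetical input and parameters, chosen only for illustration.
hidden = dense_layer([1.0, 0.0],
                     weights=[[0.5, -0.5], [-1.0, 1.0]],
                     biases=[0.0, 0.5])
output = dense_layer(hidden, weights=[[1.0, -1.0]], biases=[0.0])
print(output)  # a single value between 0 and 1
```

Training a network means adjusting those weights and biases until the outputs match the desired results.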

How Does BERT Work?

Transfer learning refers to pre-training a neural network model on a general task and then fine-tuning it on a specific task to create a highly specialized model.

In this case, BERT takes advantage of transfer learning to analyze words and sentences and learn not only their meaning but also different contexts they can appear in.

As such, instead of reading, understanding or translating sentences part by part (left-to-right or right-to-left), it reads them as a whole, absorbing each word in a bidirectional context (‘deeply bidirectional,’ as Google calls it, ‘because the contextual representations of words start from the very bottom of a deep neural network’).

As per the example provided by Google, ‘the word “bank” would have the same context-free representation in “bank account” and “bank of the river.”

Contextual models instead generate a representation of each word that is based on the other words in the sentence.

For example, in the sentence “I accessed the bank account,” a unidirectional contextual model would represent “bank” based on “I accessed the” but not “account.” However, BERT represents “bank” using both its previous and next context — “I accessed the ... account” — starting from the very bottom of a deep neural network, making it deeply bidirectional.’
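The difference between the three kinds of models in Google's example can be sketched as a question of which neighbouring words a model is allowed to look at. The snippet below is a toy illustration only: the function `visible_context` and the mode names are made up for this article, and it does not compute any actual representations.

```python
def visible_context(tokens, index, mode):
    """Return the words a model may look at when representing tokens[index]."""
    if mode == "context_free":
        return []                                   # the word on its own
    if mode == "left_to_right":
        return tokens[:index]                       # only the words before it
    if mode == "bidirectional":
        return tokens[:index] + tokens[index + 1:]  # words on both sides
    raise ValueError(f"unknown mode: {mode}")

tokens = ["I", "accessed", "the", "bank", "account"]
bank = tokens.index("bank")
for mode in ("context_free", "left_to_right", "bidirectional"):
    print(mode, visible_context(tokens, bank, mode))
```

Only the bidirectional mode gets to see “account”, which is the word that tells a financial bank from a river bank.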

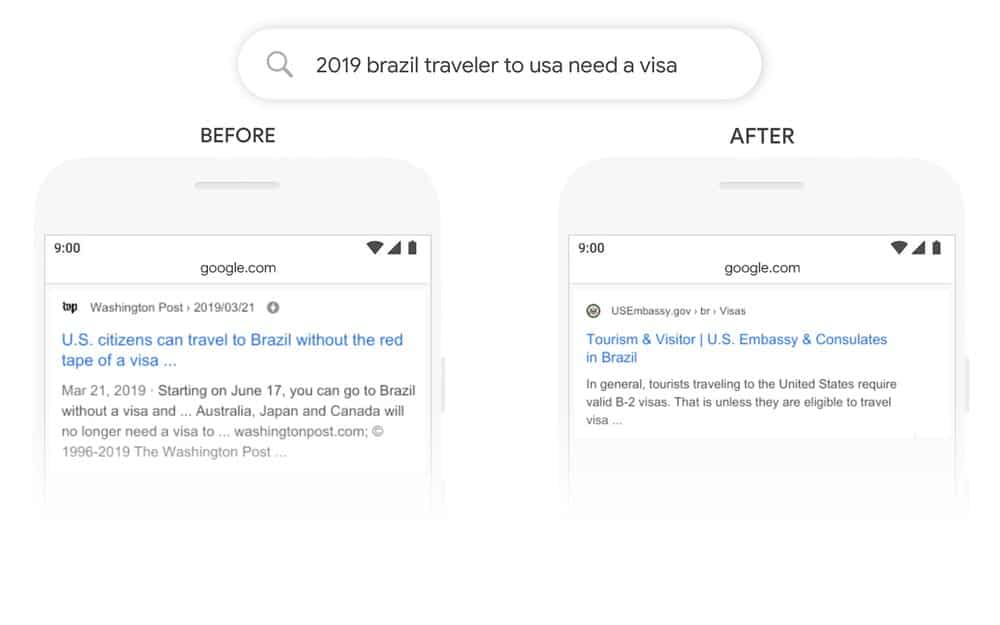

Google has provided some examples to illustrate the impact of BERT on search results.

Source: Google

In one of them, the query “2019 brazil traveler to usa need a visa” used to return an article about Americans traveling to Brazil, which is the opposite of what the user asked.

After the implementation of BERT, which better captures the query as a whole and the relations between its words, Google provides more accurate results, including the website of the US embassy in Brazil – where you can get all the information about a visa and apply for one if needed.

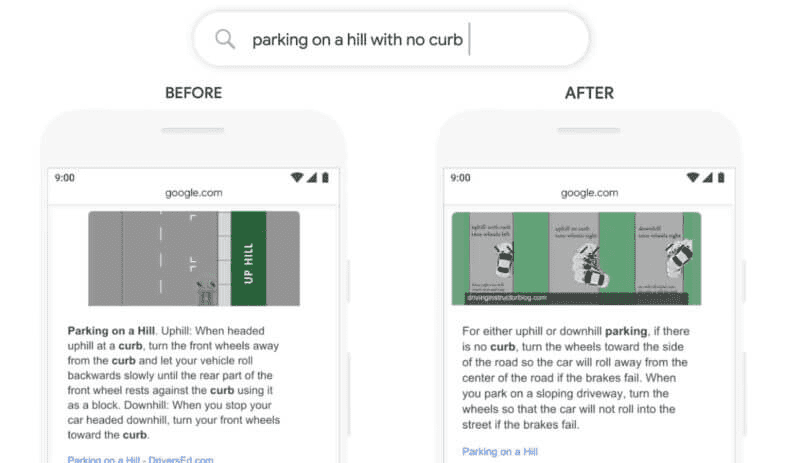

BERT also improves featured snippets wherever the feature is available. In the example below, before implementing BERT, the search results displayed advice for parking on a hill, completely ignoring the “with no curb” part.

Source: Google

To achieve these results, BERT uses two training techniques together: Masked LM and Next Sentence Prediction.

Masked LM

Masked LM, or MLM, is a training technique that allows models to be trained bidirectionally.

To train BERT, around 15% of the words in every sequence are replaced with a [MASK] token. Then, the sequences are fed to the algorithm, which tries to predict the masked words according to the context of the rest.

For example, let’s say the sequence is “I felt sick, so I went to the [MASK]”. The algorithm will try to predict the missing word, such as “doctor” or “hospital”, based on the context.

Another fill-in-the-gap example comes from a tweet by Rad Paluszak (@radpaluszak, May 5, 2022): “Paris is the <??> of France.” Candidate words for the gap include:

- capital

- heart

- center

- centre

- city

- language

BERT can measure the probability of each candidate being the masked word.
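The masking step itself is simple enough to sketch in a few lines of Python. This is a simplified illustration under a few assumptions, not Google's actual preprocessing code: the function name `mask_tokens` is made up, words are split on spaces instead of using BERT's own tokenizer, and every chosen word is replaced with [MASK] (the original BERT recipe sometimes keeps the chosen word or swaps it for a random one instead).

```python
import random

def mask_tokens(tokens, mask_rate=0.15, seed=None):
    """Replace roughly mask_rate of the tokens with [MASK].
    Returns the masked sequence and a {position: original word} dict."""
    rng = random.Random(seed)
    n_to_mask = max(1, round(len(tokens) * mask_rate))
    positions = sorted(rng.sample(range(len(tokens)), n_to_mask))
    masked = list(tokens)
    labels = {}
    for pos in positions:
        labels[pos] = masked[pos]   # the word the model has to predict
        masked[pos] = "[MASK]"
    return masked, labels

sentence = "I felt sick , so I went to the doctor".split()
masked, labels = mask_tokens(sentence, seed=0)
print(" ".join(masked))
print(labels)
```

During training, the model only sees the masked sequence and is scored on how well it recovers the words stored in `labels`.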

Next Sentence Prediction

Next Sentence Prediction, or NSP, involves giving the model pairs of sentences so that it learns to predict whether the second sentence actually follows the first in the original text. For this to work, around half of the pairs consist of a sentence and its true successor, while in the other half the second sentence is chosen at random, and the model has to tell the two cases apart.

For example, given the sentences “I have a dog.” and “I have a cat.”, the model should predict that the second does not follow the first. However, given “I have a dog.” and “I take it for a walk.”, the model should predict that the second sentence does follow the first in the original text.

Source: https://ai.googleblog.com/2018/11/open-sourcing-bert-state-of-art-pre.html
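Building NSP training pairs can be sketched as follows. Treat it as a rough illustration rather than the actual pipeline: the function `make_nsp_pairs` and the sample sentences are made up, and the random "wrong" sentence is drawn from the same short text, whereas real training data would normally draw it from a different document.

```python
import random

def make_nsp_pairs(sentences, seed=None):
    """Build (first, second, is_next) examples: roughly half real
    neighbours, half randomly chosen second sentences."""
    rng = random.Random(seed)
    examples = []
    for i in range(len(sentences) - 1):
        if rng.random() < 0.5:
            examples.append((sentences[i], sentences[i + 1], True))
        else:
            # any sentence except the first one itself and its true successor
            candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
            examples.append((sentences[i], rng.choice(candidates), False))
    return examples

# Needs at least three sentences so a random alternative always exists.
document = ["I have a dog.", "I take it for a walk.",
            "We go to the park.", "Then we come home."]
for first, second, is_next in make_nsp_pairs(document, seed=0):
    print(is_next, "|", first, "->", second)
```

The model is then trained to output the `is_next` label from the two sentences alone.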

What Are the Benefits of the Development and Introduction of BERT?

BERT brings quite a few benefits, most of them revolving around search and the way users interact with Google.

- The algorithm makes search more human-like and natural, as it understands the context of the user’s query and provides relevant results.

- BERT also helps Google better understand user intent. This is especially important for long-tail keywords, which are usually more specific and have lower search volume but higher conversion rates.

- It paves the way for future developments in NLP and machine learning.

- It also benefits featured snippets, as it can provide more relevant and accurate information.

- When it comes to the optimization itself, there’s nothing you need to do; you benefit from more relevant search results without further optimizing your online presence.

- It’s available in a variety of languages, which helps Google deliver relevant results to users from all over the world.

- BERT also helps with voice search, as it better understands the context of the user’s query and can provide more accurate results.

- Last but not least, BERT makes search more inclusive, as it can better understand queries that are written in a more colloquial style or contain grammar mistakes.

Polysemy, Homonymy, Homographs, Homophones – the Importance of BERT

Polysemy, homonymy, homographs, and homophones are examples of linguistic phenomena that can create confusion and make it difficult for machines to understand human language.

Polysemy is the capacity for a word or phrase to have two or more meanings, e.g., the word “draw.” It can mean pulling something toward oneself or making a picture.

Homonymy is when two words have the same spelling or pronunciation but have different meanings and origins, e.g., the word “bank.” It can refer to a financial institution or the edge of a river.

Homographs are words that have the same spelling but have different meanings and pronunciations, e.g., the word “lead,” pronounced in two different ways. It can refer to a metal or to guide someone.

Homophones are words that have the same pronunciation but have different meanings and spellings, e.g., the words "their," "they're," and "there."

BERT is designed to help overcome these challenges and improve the way machines understand human language.

What Did the Introduction of BERT Mean for SEO and Link Building?

It goes without saying that such a significant change in Google's algorithm had an impact on SEO and link-building strategies.

Some of the key things to keep in mind are:

- Compared to the RankBrain era, Google is now much better at understanding users’ queries and online content. This affects both on-page and outreach content strategies: content needs to be of the highest quality, relevant and natural.

- It also means that link building should be done in a more strategic and natural way, rather than with a sole focus on anchor text. Relevance and authority should be key considerations. Here, at Husky Hamster, we focus on quality link building that will help you rank higher and build brand awareness and authority at the same time.

- This has an impact on keyword research, as you need to focus on long-tail keywords that are more specific and have lower search volume but higher conversion rates.

- Moreover, bear in mind that it's not over. New models keep appearing, such as RoBERTa (a BERT variant developed by Facebook AI) or Google MUM, so it's crucial to stay up to date with the latest news, as Google's algorithm will only get better and pickier.

- The minimum requirements for content writers and copywriters are higher than ever before. It's no longer enough to be a decent writer who knows how to sneak in a keyword or two, as Google will immediately see through it and devalue your content.

- If you want to build authority as a trustworthy expert in your field, following the E-A-T guidelines is essential, but it has also become more challenging. You need to create in-depth, well-researched, quality content that appeals to both users and Google and provides information that isn't included in all the other pieces on the same topic.

How Can I Use BERT?

BERT was open-sourced by Google, meaning you can use it to your advantage and train your own state-of-the-art language processing system. Adapting it to a new task typically comes down to adding a layer on top of the core model.

Common applications include text classification, entity recognition, and question answering.

Text classification is the task of assigning a label to a piece of text, such as ‘spam’ or ‘not spam’, ‘positive’ or ‘negative’, etc. For example, you can use BERT to build a text classifier that can automatically tag support questions as ‘technical’, ‘billing’, or ‘sales’.

Source: https://towardsdatascience.com/text-classification-with-bert-in-pytorch-887965e5820f

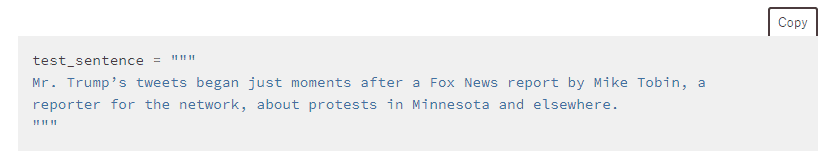
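The "extra layer" used for classification can be pictured as one linear layer followed by a softmax, applied to the vector that BERT produces for the whole sentence. The sketch below is a toy version under loud assumptions: the 4-dimensional `vector` merely stands in for BERT's output (BERT-base vectors have 768 dimensions), and the weights are invented rather than learned.

```python
import math

LABELS = ["technical", "billing", "sales"]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(sentence_vector, weights, biases):
    """The added layer: one linear score per label, then softmax."""
    scores = [sum(w * x for w, x in zip(row, sentence_vector)) + b
              for row, b in zip(weights, biases)]
    probs = softmax(scores)
    best = max(range(len(LABELS)), key=probs.__getitem__)
    return LABELS[best], probs

# Hypothetical sentence vector and made-up layer parameters.
vector = [0.2, -0.1, 0.9, 0.4]
weights = [[0.1, 0.0, -0.5, 0.2],
           [0.3, 0.1, 1.2, 0.0],
           [-0.2, 0.4, 0.1, 0.1]]
biases = [0.0, 0.0, 0.0]
label, probs = classify(vector, weights, biases)
print(label, [round(p, 3) for p in probs])
```

In a real project, fine-tuning would learn these weights from labelled support questions, usually while also adjusting BERT's own parameters.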

Entity recognition is the task of identifying and classifying named entities in a piece of text, such as people, places, organizations, and dates. For example, you can use BERT to build an entity recognition system that can automatically extract and classify entities from a sentence, such as ‘I need to book a flight from New York to London’.

Source: https://www.depends-on-the-definition.com/named-entity-recognition-with-bert/

Named entities refer to anything that can be classified as a proper name. The most common tags include:

- PER refers to a person.

- ORG refers to an organization.

- GEO refers to a geo-political entity.

- LOC refers to a location.

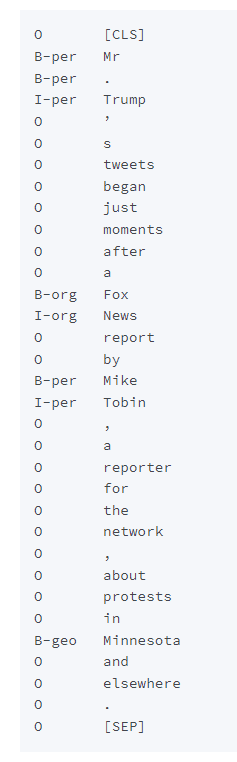

BIO tagging uses B, I, and O tags, marking the beginning, the inside, and the outside of an entity span:

Source: https://web.stanford.edu/~jurafsky/slp3/slides/8_POSNER_intro_May_6_2021.pdf

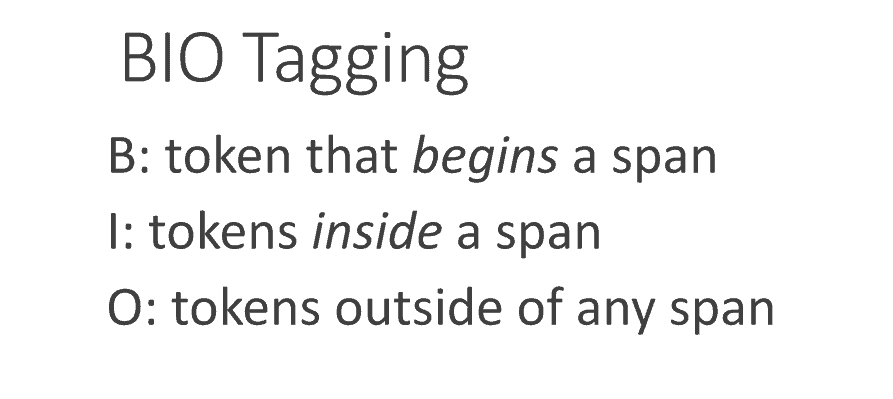
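To show how BIO tags line up with words, here is a small sketch that converts entity spans into one tag per token. The helper `to_bio` is hypothetical, and tagging both cities as GEO is an assumption made for illustration; a trained model would predict these tags rather than receive the spans as input.

```python
def to_bio(tokens, spans):
    """spans: list of (start, end, label) token positions, end exclusive."""
    tags = ["O"] * len(tokens)              # everything starts outside a span
    for start, end, label in spans:
        tags[start] = f"B-{label}"          # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"          # remaining tokens of the entity
    return tags

tokens = "I need to book a flight from New York to London".split()
spans = [(7, 9, "GEO"), (10, 11, "GEO")]    # "New York" and "London"
for token, tag in zip(tokens, to_bio(tokens, spans)):
    print(f"{token:8} {tag}")
```

The B tag is what lets a system tell two adjacent entities apart from one longer entity.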

Question answering is the task of providing a short answer to a natural language question. For example, you can use BERT to build a question answering system that can answer questions about a given context, such as ‘What is the price of product X?’

Here’s an example of the working Q&A model:

Source: https://towardsdatascience.com/question-answering-with-a-fine-tuned-bert-bc4dafd45626
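One common way to use BERT for this kind of question answering is to have the model score every word of the context as a possible start and a possible end of the answer, and then pick the best-scoring span. The sketch below covers only that last step. The function `best_answer_span`, the context sentence and all the scores are hypothetical; in a real system the scores would come from a fine-tuned model.

```python
def best_answer_span(start_scores, end_scores, max_len=5):
    """Return (start, end) token positions with the highest combined
    score, where start <= end and the span has at most max_len tokens."""
    best, best_score = None, float("-inf")
    for s, s_score in enumerate(start_scores):
        for e in range(s, min(s + max_len, len(end_scores))):
            score = s_score + end_scores[e]
            if score > best_score:
                best, best_score = (s, e), score
    return best

context = "Product X costs 20 dollars and ships in two days".split()
# Made-up scores for the question "What is the price of product X?"
start_scores = [0.1, 0.0, 0.2, 3.0, 0.5, 0.0, 0.1, 0.0, 0.3, 0.1]
end_scores = [0.0, 0.1, 0.1, 1.0, 2.8, 0.0, 0.0, 0.1, 0.2, 0.4]
start, end = best_answer_span(start_scores, end_scores)
print(" ".join(context[start:end + 1]))
```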

BERT can also change the way we go about international SEO. It helps Google better understand the context of words and queries in a variety of languages, making it possible to deliver relevant results to users from all over the world.

Final Word

The BERT algorithm was yet another step on Google’s way to perfecting search and understanding human language.

It’s not without flaws, as even Google admits, but its development was still a significant step forward in helping the search engine better understand user queries and deliver more relevant results, especially when it comes to long-tail keywords and user intent.

If you want to stay ahead of the curve, you need to understand Google algorithms and what their implications are.

Since BERT is open-sourced, you can use it to your own benefit.

After all, the better you understand how Google works, the better your chances of ranking high on the search engine and getting more traffic to your website.